How to use Go profiling tools

Exploring Profiling Tools for Go: Enhancing Performance Like a Pro

Profiling is the process of analyzing an application's runtime behavior to pinpoint performance issues. It helps developers identify where their code spends most of its time and how memory is allocated and released. Profiling tools for Go offer invaluable information for making informed optimizations and delivering a smooth and efficient user experience.

Key Types of Profiling in Go

CPU Profiling: CPU profiling identifies areas of your code that consume the most CPU time. It helps you understand which functions or methods are taking up the majority of the processing power, allowing you to focus on optimizing those areas.

Memory Profiling: Memory profiling reveals memory allocation patterns and potential memory leaks within your application. It provides insights into how your application uses memory and assists in preventing excessive memory consumption.

Goroutine Profiling: Goroutines are a hallmark of Go's concurrency model. Goroutine profiling aids in understanding how goroutines are being created, executed, and blocked, helping you optimize concurrent execution and resource utilization.

Popular Profiling Tools

pprof: The built-in pprof package is a cornerstone of Go's profiling capabilities. It offers both CPU and memory profiling, along with a web-based visualization tool. By simply importing the net/http/pprof package and exposing endpoints, you can access valuable insights about your application's performance.

go-torch: This tool creates flame graphs from pprof profiles, providing a visual representation of the CPU consumption hierarchy. Flame graphs make it easier to identify hotspots and optimize critical sections of your code.

pprof-utils: This collection of utilities extends the pprof toolset by offering additional functionalities, such as memory profiling for long-running applications and custom visualizations.

Heapster: Heapster is designed specifically for memory profiling in Go. It offers memory allocation stack traces, allowing you to identify memory-hungry sections of your code and optimize memory usage effectively.

trace: The trace package in the Go standard library facilitates tracing the execution of programs. It helps you understand the timing and interactions between goroutines, system calls, and application logic, aiding in fine-tuning concurrency.

Using Profiling Tools in Practice

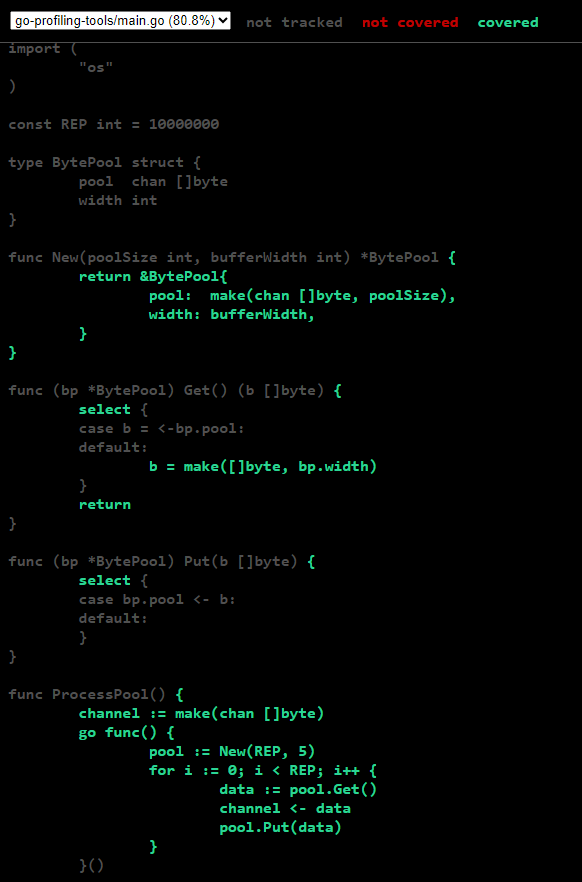

We are going to use a simple example to exercise the basic profiling tools that are part of the Go standard utilities. This sample will have two variants, one of them will allocate an array of bytes in a loop to send them to a receiving goroutine. Meanwhile the other variant will take advantage of a BytePool to reuse the required array of bytes, instead of allocating new ones in each iteration of the loop.

package main

import (

"os"

)

const REP int = 10000000

type BytePool struct {

pool chan []byte

width int

}

func New(poolSize int, bufferWidth int) *BytePool {

return &BytePool{

pool: make(chan []byte, poolSize),

width: bufferWidth,

}

}

func (bp *BytePool) Get() (b []byte) {

select {

case b = <-bp.pool:

default:

b = make([]byte, bp.width)

}

return

}

func (bp *BytePool) Put(b []byte) {

select {

case bp.pool <- b:

default:

}

}

func ProcessPool() {

channel := make(chan []byte)

go func() {

pool := New(REP, 5)

for i := 0; i < REP; i++ {

data := pool.Get()

channel <- data

pool.Put(data)

}

}()

for i := 0; i < REP; i++ {

_ = <-channel

}

}

func Process() {

channel := make(chan []byte)

go func() {

for i := 0; i < REP; i++ {

data := []byte("hello")

channel <- data

}

}()

for i := 0; i < REP; i++ {

_ = <-channel

}

}

func main() {

arguments := os.Args

if (arguments[1] == "process") {

Process()

} else if (arguments[1] == "processPool") {

ProcessPool()

}

}

Garbage Collection

GODEBUG=gctrace=1 go run main.go process

gc 1 @0.071s 0%: 0.043+0.31+0.022 ms clock, 0.34+0.073/0.27/0.31+0.18 ms cpu, 3->3->0 MB, 4 MB goal, 0 MB stacks, 0 MB globals, 8 P

gc 2 @0.080s 0%: 0.022+0.68+0.002 ms clock, 0.17+0.38/0.62/0.008+0.022 ms cpu, 3->4->1 MB, 4 MB goal, 0 MB stacks, 0 MB globals, 8 P

gc 3 @0.083s 0%: 0.034+0.66+0.028 ms clock, 0.27+0/0.70/0.37+0.22 ms cpu, 3->3->1 MB, 4 MB goal, 0 MB stacks, 0 MB globals, 8 P

gc 4 @0.089s 0%: 0.010+0.37+0.002 ms clock, 0.081+0.19/0.43/0.22+0.018 ms cpu, 3->4->1 MB, 4 MB goal, 0 MB stacks, 0 MB globals, 8 P

gc 5 @0.091s 0%: 0.11+0.59+0.11 ms clock, 0.88+0.10/0.51/0.14+0.92 ms cpu, 3->4->1 MB, 4 MB goal, 0 MB stacks, 0 MB globals, 8 P

gc 6 @0.093s 0%: 0.008+0.33+0.002 ms clock, 0.070+0.089/0.32/0.34+0.020 ms cpu, 3->3->1 MB, 4 MB goal, 0 MB stacks, 0 MB globals, 8 P# command-line-arguments

gc 1 @0.002s 5%: 0.050+0.63+0.024 ms clock, 0.40+0.16/0.96/0.42+0.19 ms cpu, 3->4->2 MB, 4 MB goal, 0 MB stacks, 0 MB globals, 8 P

gc 2 @0.006s 6%: 0.044+1.2+0.036 ms clock, 0.35+0.49/1.4/0.53+0.29 ms cpu, 6->6->4 MB, 6 MB goal, 0 MB stacks, 0 MB globals, 8 P

# command-line-arguments

gc 1 @0.001s 6%: 0.018+0.54+0.022 ms clock, 0.14+0.27/0.34/0.56+0.17 ms cpu, 4->6->5 MB, 4 MB goal, 0 MB stacks, 0 MB globals, 8 P

gc 2 @0.003s 5%: 0.008+0.97+0.037 ms clock, 0.070+0.051/0.40/0.72+0.29 ms cpu, 9->9->9 MB, 11 MB goal, 0 MB stacks, 0 MB globals, 8 P

gc 1 @0.111s 0%: 0.019+0.23+0.079 ms clock, 0.15+0.052/0.041/0+0.63 ms cpu, 3->3->0 MB, 4 MB goal, 0 MB stacks, 0 MB globals, 8 P

gc 2 @0.227s 0%: 0.14+0.20+0.064 ms clock, 1.1+0.031/0.10/0+0.51 ms cpu, 3->3->0 MB, 4 MB goal, 0 MB stacks, 0 MB globals, 8 P

gc 3 @0.348s 0%: 0.091+0.23+0.022 ms clock, 0.72+0.032/0.085/0.030+0.17 ms cpu, 3->3->0 MB, 4 MB goal, 0 MB stacks, 0 MB globals, 8 P

gc 4 @0.466s 0%: 0.048+0.29+0.040 ms clock, 0.38+0.030/0.044/0.024+0.32 ms cpu, 3->3->0 MB, 4 MB goal, 0 MB stacks, 0 MB globals, 8 P

gc 5 @0.551s 0%: 0.078+0.20+0.032 ms clock, 0.62+0.027/0.074/0.016+0.25 ms cpu, 2->2->0 MB, 4 MB goal, 0 MB stacks, 0 MB globals, 8 P

gc 6 @0.665s 0%: 0.074+0.36+0.082 ms clock, 0.59+0.032/0.10/0+0.66 ms cpu, 3->3->0 MB, 4 MB goal, 0 MB stacks, 0 MB globals, 8 P

gc 7 @0.752s 0%: 0.041+0.36+0.079 ms clock, 0.33+0.028/0.063/0.016+0.63 ms cpu, 2->2->0 MB, 4 MB goal, 0 MB stacks, 0 MB globals, 8 P

gc 8 @0.866s 0%: 0.14+0.50+0.072 ms clock, 1.1+0.062/0.060/0+0.57 ms cpu, 3->3->0 MB, 4 MB goal, 0 MB stacks, 0 MB globals, 8 P

gc 9 @0.953s 0%: 0.066+0.27+0.089 ms clock, 0.52+0.028/0.055/0+0.71 ms cpu, 2->2->0 MB, 4 MB goal, 0 MB stacks, 0 MB globals, 8 P

gc 10 @1.069s 0%: 0.076+0.36+0.023 ms clock, 0.61+0.041/0.049/0+0.18 ms cpu, 3->3->0 MB, 4 MB goal, 0 MB stacks, 0 MB globals, 8 P

gc 11 @1.185s 0%: 0.074+0.30+0.083 ms clock, 0.59+0.036/0.050/0+0.66 ms cpu, 3->3->0 MB, 4 MB goal, 0 MB stacks, 0 MB globals, 8 P

gc 12 @1.301s 0%: 0.077+0.26+0.035 ms clock, 0.61+0.028/0.075/0.040+0.28 ms cpu, 3->3->0 MB, 4 MB goal, 0 MB stacks, 0 MB globals, 8 P

gc 13 @1.386s 0%: 0.087+0.38+0.075 ms clock, 0.69+0.030/0.049/0+0.60 ms cpu, 2->2->0 MB, 4 MB goal, 0 MB stacks, 0 MB globals, 8 P

gc 14 @1.503s 0%: 0.081+0.20+0.002 ms clock, 0.65+0.068/0.045/0+0.016 ms cpu, 3->3->0 MB, 4 MB goal, 0 MB stacks, 0 MB globals, 8 P

GODEBUG=gctrace=1 go run main.go processPool

gc 1 @0.053s 0%: 0.080+0.24+0.023 ms clock, 0.64+0.039/0.28/0.36+0.19 ms cpu, 3->3->0 MB, 4 MB goal, 0 MB stacks, 0 MB globals, 8 P

gc 2 @0.060s 0%: 0.038+0.74+0.002 ms clock, 0.31+0.38/0.79/0.37+0.022 ms cpu, 3->4->1 MB, 4 MB goal, 0 MB stacks, 0 MB globals, 8 P

gc 3 @0.062s 0%: 0.031+0.49+0.027 ms clock, 0.25+0.15/0.46/0.48+0.21 ms cpu, 3->3->0 MB, 4 MB goal, 0 MB stacks, 0 MB globals, 8 P

gc 4 @0.066s 0%: 0.010+0.35+0.002 ms clock, 0.084+0.040/0.39/0.33+0.023 ms cpu, 3->3->1 MB, 4 MB goal, 0 MB stacks, 0 MB globals, 8 P

gc 5 @0.068s 0%: 0.008+0.35+0.026 ms clock, 0.065+0.052/0.47/0.37+0.21 ms cpu, 3->3->1 MB, 4 MB goal, 0 MB stacks, 0 MB globals, 8 P

gc 6 @0.070s 1%: 0.010+0.46+0.022 ms clock, 0.083+0.040/0.35/0.45+0.18 ms cpu, 3->4->1 MB, 4 MB goal, 0 MB stacks, 0 MB globals, 8 P

# command-line-arguments

gc 1 @0.002s 5%: 0.050+0.63+0.024 ms clock, 0.40+0.16/0.96/0.42+0.19 ms cpu, 3->4->2 MB, 4 MB goal, 0 MB stacks, 0 MB globals, 8 P

gc 2 @0.006s 6%: 0.044+1.2+0.036 ms clock, 0.35+0.49/1.4/0.53+0.29 ms cpu, 6->6->4 MB, 6 MB goal, 0 MB stacks, 0 MB globals, 8 P

# command-line-arguments

gc 1 @0.001s 5%: 0.006+0.45+0.003 ms clock, 0.054+0.25/0.43/0.21+0.024 ms cpu, 4->5->5 MB, 4 MB goal, 0 MB stacks, 0 MB globals, 8 P

gc 2 @0.002s 7%: 0.007+0.76+0.025 ms clock, 0.063+0.048/0.99/0.35+0.20 ms cpu, 9->9->9 MB, 11 MB goal, 0 MB stacks, 0 MB globals, 8 P

gc 1 @0.018s 13%: 0.009+11+0.003 ms clock, 0.075+9.3/21/45+0.030 ms cpu, 229->229->228 MB, 4 MB goal, 0 MB stacks, 0 MB globals, 8 P

Heap escape analysis

go run -gcflags '-m' main.go process

# command-line-arguments

./main.go:14:6: can inline New

./main.go:21:6: can inline (*BytePool).Get

./main.go:30:6: can inline (*BytePool).Put

./main.go:39:5: can inline ProcessPool.func1

./main.go:40:14: inlining call to New

./main.go:42:20: inlining call to (*BytePool).Get

./main.go:44:12: inlining call to (*BytePool).Put

./main.go:55:5: can inline Process.func1

./main.go:15:9: &BytePool{...} escapes to heap

./main.go:21:7: bp does not escape

./main.go:25:11: make([]byte, bp.width) escapes to heap

./main.go:30:7: bp does not escape

./main.go:30:25: leaking param: b

./main.go:39:5: func literal escapes to heap

./main.go:40:14: &BytePool{...} does not escape

./main.go:42:20: make([]byte, bp.width) escapes to heap

./main.go:55:5: func literal escapes to heap

./main.go:57:18: ([]byte)("hello") escapes to heap

Memory benchmark

package main

import "testing"

func TestProcess(t *testing.T){

Process()

}

func BenchmarkProcess(b *testing.B){

for n := 0; n < b.N; n++ {

Process()

}

}

func TestProcessPool(t *testing.T){

ProcessPool()

}

func BenchmarkProcessPool(b *testing.B){

for n := 0; n < b.N; n++ {

ProcessPool()

}

}

go test -bench . -benchmem

goos: linux

goarch: amd64

pkg: go-profiling-tools

cpu: 11th Gen Intel(R) Core(TM) i7-1185G7 @ 3.00GHz

BenchmarkProcess-8 1 1585461568 ns/op 53334224 B/op 10000006 allocs/op

BenchmarkProcessPool-8 1 2078355011 ns/op 240001664 B/op 6 allocs/op

PASS

ok go-profiling-tools 7.252s

- allocs/op: The average number of memory allocations per benchmark iteration.

- alloced/op: The average amount of memory allocated per benchmark iteration.

- bytes/op: The average amount of memory allocated per operation (operation in the benchmark code).

- mallocs/op: The number of heap allocations (mallocs) per benchmark iteration.

- frees/op: The number of heap deallocations (frees) per benchmark iteration.

CPU profiling

sudo apt install graphviz

go test -cpuprofile cpu.out && go tool pprof -svg -output=cpu.out.svg cpu.out

PASS

ok go-profiling-tools 3.917s

Generating report in cpu.out.svg

|

| cpu.out.svg |

RAM profiling

go test -test.memprofilerate=1 -memprofile mem.out && go tool pprof -svg -output=mem.out.svg mem.out

PASS

ok go-profiling-tools 8.389s

Generating report in mem.out.svg

|

| mem.out.svg |

Coverage profiling

go test -coverprofile=coverage.out && go tool cover -html=coverage.out -o coverage.out.html

PASS

coverage: 80.8% of statements

ok go-profiling-tools 3.948s

|

| coverage.out.html |

Conclusion

Profiling tools are indispensable assets for any Go developer aiming to create performant applications. By harnessing the capabilities of CPU, memory, and goroutine profiling, you can unlock the full potential of Go's efficiency and concurrency model. Whether you're building microservices, web applications, or system-level software, profiling tools provide the roadmap to optimizing performance, minimizing resource consumption, and delivering exceptional user experiences. So, embrace the power of profiling tools and embark on a journey toward crafting high-performance Go applications that stand out in today's competitive software landscape.